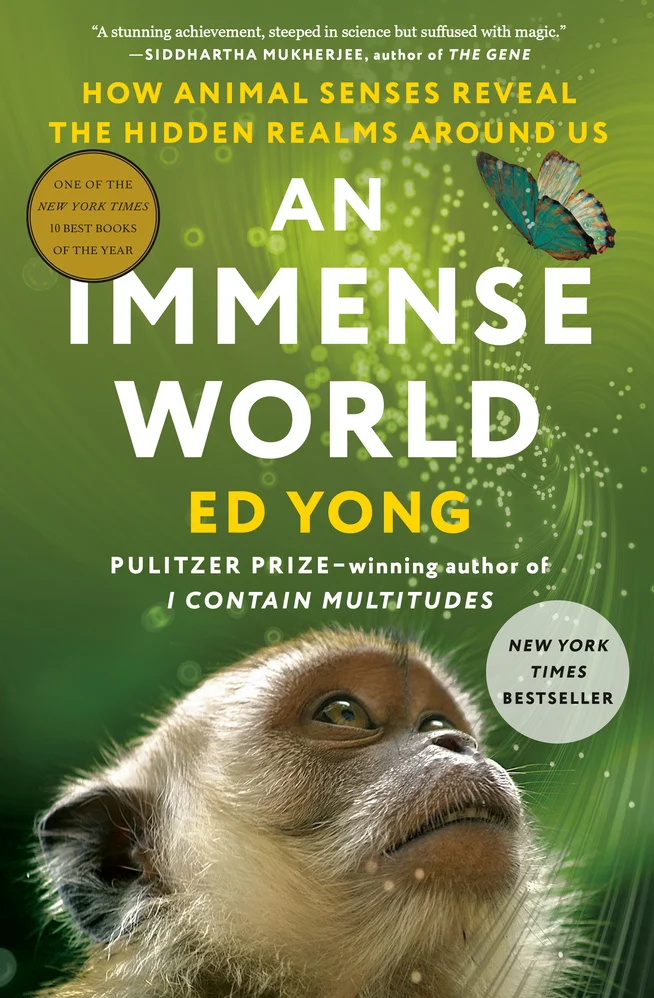

Confession: I’m a big fan of science writer Ed Yong. I followed his work at The Atlantic for years, particularly during the pandemic. His newsletter, The Ed’s Up, is worth reading (bird photos FTW). His latest book, An Immense World: How Animal Senses Reveal Hidden Realms Around Us (bookshop.org link), is both fascinating and exceptionally well written.

I think the quality of Ed Yong’s work is next level, so when he says something like this, I pay special attention:

“My position is pretty straightforward: There is no ethical way to use generative AI, and I avoid doing so both professionally and personally.”

To be absolutely clear: I am not an expert on generative AI. I am not a user of generative AI. I’m still trying to wrap my head around the idea of generative AI.

These are some of my concerns:

- Do users know what content was used to train the LLM? To be more blunt, was the content stolen?

- Generative AI tends to insert artifacts (again, to be blunt, LLMs can lie — remember this story?). Are users savvy enough to figure out whether or not that is happening, and where?

- At what stage of a project is generative AI considered helpful? Do the expectations of users reflect the intent of developers?

For me, I think that generative AI would not be helpful for doing the bulk of my research; I need my brain to grapple with ideas, in clumsy ways, to find a way through. I feel like trying to use generative AI might add too much noise to an already messy process. I mean, maybe at the very beginning of a project, when I’m trying to wrap my head around something new, I could prompt an LLM to see if it helps me identify avenues of research I hadn’t originally thought about?

My husband uses an LLM to generate code snippets when he gets stuck, and that seems like it might be a useful application. Generating useful code requires that you already understand what you want the code to do (even if you’re missing some syntactic nuance), and whatever the LLM generates has to be tested and integrated into a larger whole.

On a different front, last week in my travels around social media, I saw this article: ‘AI-Mazing Tech-Venture’: National Archives Pushes Google Gemini AI on Employees.

Whoa. If NARA (National Archives and Records Administration) is working with an AI, that might be a thing, right? It’s not too surprising to discover, as the article points out, that at least some archivists are… wary… of AI, in general, particularly after the organization told them not to use ChatGPT. Some of the cited concerns: accuracy and environmental impact.

I can’t (and shouldn’t) be trusted to make decisions for anyone else regarding use of generative AI. I, frankly, don’t have enough relevant experience with it to speak with any authority about it. That said, at this stage, if I were going to use an LLM on a regular basis, it would occupy the role of a natural language search engine, one that I don’t entirely trust.